Playgrounds: Stop Guessing Which Model Works Best

You'll test a prompt dozens of times. Different models. Different wordings. Every time a new model launches.

Right now that means: Open ChatGPT. Run it. Open Claude. Copy-paste. Run it. Open Gemini. Copy-paste again. Run it. Screenshot the results. Share them in Slack.

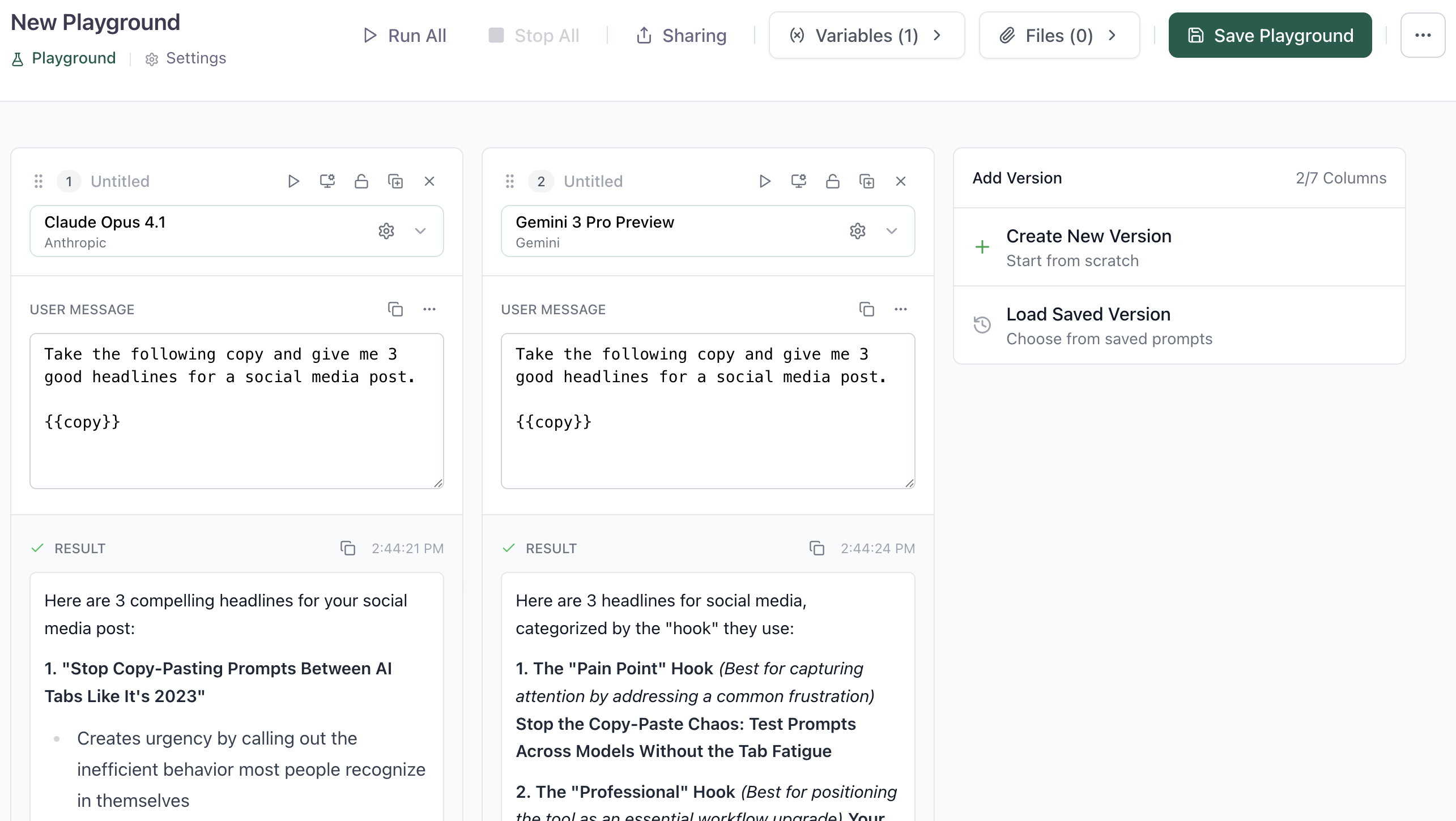

Playgrounds automates all of that. Test across every model you select at once. Compare results side-by-side. Save the complete test state. Share the link. Your team sees exactly what you tested and can iterate from there.

Test Across Every Model Instantly

Pick a prompt from your library or start from scratch. Select models from a dropdown (GPT-4, Claude, Gemini, whatever you need) and run them all. When a new model like GPT-5 launches, add it to the grid and retest. Same workflow. No more copying prompts between ChatGPT, Claude, and Gemini tabs.

Compare Iterations Side-by-Side

You tweaked the wording. Did it help? Load both versions into separate columns. Hit "run all." The outputs appear side-by-side, so you can see at a glance which version performs better.

Built for Production-Level Prompting

Load Any Prompt Version

Access your entire prompt library and version history. Test the current version or load earlier versions to compare against previous iterations.

Run All at Once

Test multiple prompt variations across multiple models simultaneously. No more running tests one at a time.

Visual Diff Comparison

See outputs side-by-side in separate columns. Spot differences immediately instead of trying to remember what changed.

Collaborative Review

Share complete testing states as links. Your team sees the full context (prompts, configs, and results) and is ready to iterate.

Stop Testing Prompts in Browser Tabs

Explore Other Parts of Aisle

Multi-Model Chat

One interface for your whole team to interact with all leading AI models. Stop managing multiple subscriptions.

Reusable Prompts

Build reusable AI components that your whole team can use. Deploy to chat, API, or workflows.

Interactive Workflows

Build complex AI processes without code. Chain prompts, integrate systems, and automate decisions.
